In this notebook, I will demonstrate how to use monotonicity constraints in the popular open source gradient boosting package XGBoost to train an interpretable and accurate nonlinear classifier on the UCI credit card default data. Monotonicity constraints can turn opaque, complex models into transparent, and potentially regulator-approved, models by ensuring that, as a given input variable increases, predictions only ever increase or only ever decrease.

- Why you should care about debugging machine learning models
- Enhancing Transparency in Machine Learning Models with Python and XGBoost - Notebook
- Warning Signs: Security and Privacy in an Age of Machine Learning
- Real-World Strategies for Model Debugging
- Proposed Guidelines for the Responsible Use of Explainable Machine Learning
- Proposals for model vulnerability and security
- On the Art and Science of Explainable Machine Learning
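To make the monotonicity property described above concrete, here is a minimal sketch of how one might check it for any scoring function. In XGBoost the constraint itself is requested through the `monotone_constraints` training parameter. The code below does not train a model and uses only the standard library. The helper `is_monotonic_in_feature`, the scorer `toy_default_score`, and the two features (months of payment delay, credit limit) are hypothetical names chosen for illustration; they do not come from the notebook.

```python
from typing import Callable, Sequence


def is_monotonic_in_feature(
    predict: Callable[[Sequence[float]], float],
    row: Sequence[float],
    feature_index: int,
    grid: Sequence[float],
    increasing: bool = True,
) -> bool:
    """Sweep one feature over `grid`, hold the others fixed, and check direction."""
    preds = []
    for value in sorted(grid):
        probe = list(row)              # copy so the caller's row is untouched
        probe[feature_index] = value   # change only the feature under test
        preds.append(predict(probe))
    pairs = list(zip(preds, preds[1:]))
    if increasing:
        return all(a <= b for a, b in pairs)
    return all(a >= b for a, b in pairs)


# Hypothetical stand-in for a trained classifier (an assumption, not the
# notebook's model): risk rises with payment delay and falls with credit limit.
def toy_default_score(x: Sequence[float]) -> float:
    delay_months, credit_limit = x
    raw = 0.2 + 0.1 * delay_months - 0.000001 * credit_limit
    return min(1.0, max(0.0, raw))     # clip to a probability-like range


if __name__ == "__main__":
    row = [2, 50000]
    print(is_monotonic_in_feature(toy_default_score, row, 0, range(0, 9)))
    print(is_monotonic_in_feature(
        toy_default_score, row, 1, [10000, 50000, 200000], increasing=False))
```

A check like this only probes the grid points and the single row it is given, so it can reveal a violation but cannot prove that a model is monotonic everywhere.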

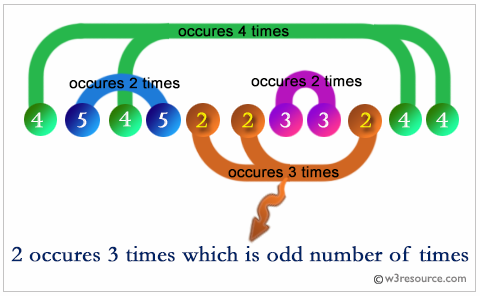

- An Introduction to Machine Learning Interpretability, 2nd Edition
- A Responsible Machine Learning Workflow with Focus on Interpretable Models, Post-hoc Explanation, and Discrimination Testing
- Machine Learning: Considerations for fairly and transparently expanding access to credit

The notebooks highlight techniques such as:

- Detailed model comparison and model selection by cross-validated ranking
- Advanced residual analysis for model debugging
- Advanced sensitivity analysis for model debugging
- Decision tree surrogates, reason codes, and ensembles of explanations
- Monotonic XGBoost models, partial dependence, individual conditional expectation plots, and Shapley explanations
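Two of the techniques named here, individual conditional expectation (ICE) and partial dependence, can be sketched in a few lines. This is a minimal standard-library illustration under stated assumptions, not the notebooks' implementation: `ice_curve`, `partial_dependence`, and the linear `toy_model` are hypothetical names, and a real analysis would call a trained model's prediction function instead.

```python
from typing import Callable, List, Sequence


def ice_curve(
    predict: Callable[[Sequence[float]], float],
    row: Sequence[float],
    feature_index: int,
    grid: Sequence[float],
) -> List[float]:
    """ICE: predictions for one row as a single feature is swept over `grid`."""
    curve = []
    for value in grid:
        probe = list(row)              # copy the row, then overwrite one feature
        probe[feature_index] = value
        curve.append(predict(probe))
    return curve


def partial_dependence(
    predict: Callable[[Sequence[float]], float],
    rows: Sequence[Sequence[float]],
    feature_index: int,
    grid: Sequence[float],
) -> List[float]:
    """Partial dependence: the pointwise average of the ICE curves of all rows."""
    curves = [ice_curve(predict, row, feature_index, grid) for row in rows]
    return [sum(column) / len(curves) for column in zip(*curves)]


# Hypothetical toy model (an assumption for illustration only).
def toy_model(x: Sequence[float]) -> float:
    return 2 * x[0] + x[1]


if __name__ == "__main__":
    rows = [[0, 1], [0, 3]]
    grid = [0, 1, 2]
    print(ice_curve(toy_model, rows[0], 0, grid))
    print(partial_dependence(toy_model, rows, 0, grid))
```

Plotting the ICE curves alongside their average is a common way to see whether individual rows behave differently from the overall trend.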

#Python tsearch explanation series#

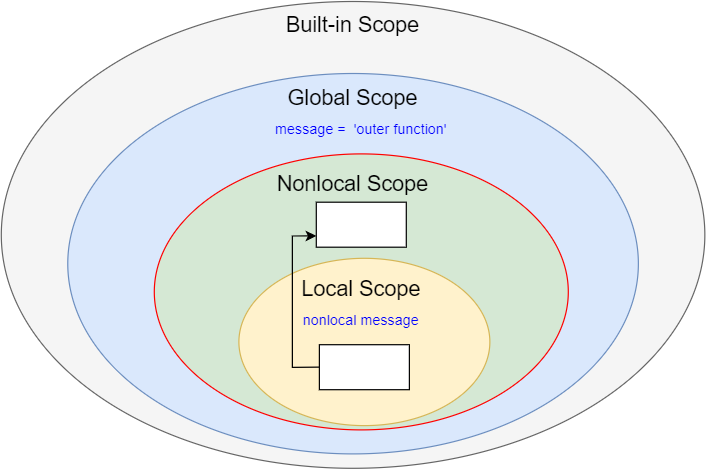

Usage of artificial intelligence (AI) and machine learning (ML) models is likely to become more commonplace as larger swaths of the economy embrace automation and data-driven decision-making. While these predictive systems can be quite accurate, they have often been inscrutable and unappealable black boxes that produce only numeric predictions with no accompanying explanations. Unfortunately, recent studies and recent events have drawn attention to mathematical and sociological flaws in prominent weak AI and ML systems, but practitioners often lack the right tools to pry open ML models and debug them. This series of notebooks introduces several approaches that increase transparency, accountability, and trustworthiness in ML models, with examples of techniques for training interpretable ML models, explaining ML models, and debugging ML models for accuracy, discrimination, and security.

If you are a data scientist or analyst and you want to train accurate, interpretable ML models, explain ML models to your customers or managers, test those models for security vulnerabilities or social discrimination, or if you have concerns about documentation, validation, or regulatory requirements, then this series of Jupyter notebooks is for you! (But please don't take these notebooks or associated materials as legal compliance advice.)
